Deployment Methodologies

Developers getting ready to create their first application in the cloud can look to a number of rules that are generally accepted for successfully creating applications that run exclusively in the public cloud.

Several years ago, Heroku cofounder Adam Wiggins released a suggested blueprint, called the Twelve-Factor App Methodology, for creating native software as a service (SaaS) applications hosted in the public cloud. These guidelines can be viewed as a set of best practices to consider when deploying applications in the cloud. Of course, depending on your deployment methods, you may quibble with some of the rules, and that’s okay. There are many methodologies available to deploy applications. There are also many complementary management services hosted at AWS that greatly speed up the development process, regardless of the model used.

The development and operational model that you choose to embrace will shape the development and deployment path that you follow.

Before deploying applications in the cloud, you should carefully review your current development process and perhaps consider taking some of the steps in the Twelve-Factor App Methodology, which are described in the following sections. Applications hosted in the cloud also need infrastructure, so these rules for proper application deployment don’t stand alone; cloud infrastructure is a necessary part of them. The following sections look at the 12 rules of the Twelve-Factor App Methodology from an infrastructure point of view and identify the AWS services that can help with each rule. This information can help you understand both the rules and the AWS services that can be useful in application development.

Rule 1: Use One Codebase That Is Tracked with Version Control to Allow Many Deployments

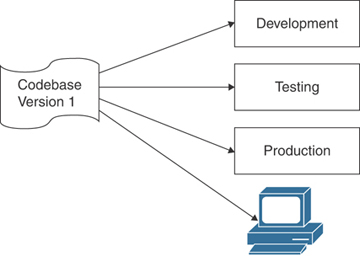

In development circles, this rule is non-negotiable; it must be followed. Creating an application usually involves three separate environments: development, testing, and production (see Figure 3-22). The same codebase should be used in each environment, whether it’s the developer’s laptop, a set of testing server EC2 instances, or the production EC2 instances. Operating systems, off-the-shelf software, dynamic-link libraries (DLLs), development environments, and application code are always defined and controlled by versions. Each version of application code needs to be stored separately and securely in a safe location. Multiple AWS environments can take advantage of multiple availability zones and multiple VPCs.

FIGURE 3-22 One Codebase, Regardless of Location

Developers typically use code repositories such as GitHub to store their code. As your codebase undergoes revisions, each revision needs to be tracked; after all, a single codebase might be responsible for thousands of deployments, and documenting and controlling the separate versions of the codebase just makes sense. Amazon has a Git-based code repository service, called CodeCommit, that may be more convenient than GitHub for applications hosted at AWS.

At the infrastructure level at Amazon, it is important to consider dependencies. The AWS infrastructure components to keep track of include the following:

AMIs: Images for web, application, database, and appliance instances. AMIs should be version controlled.

EBS volumes: Boot volumes and data volumes should be tagged by version number for proper identification and control.

EBS snapshots: Snapshots used to create boot volumes are part of the AMI.

Containers: Each container image should be referenced by its version number.

AWS CodeCommit

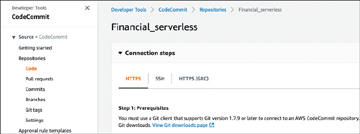

CodeCommit is a hosted AWS version control service with no storage size limits (see Figure 3-23). It allows AWS customers to privately store their source and binary code at AWS, where it is automatically encrypted at rest and in transit. CodeCommit allows customers to store code versions at AWS rather than at GitHub without worrying about running out of storage space. CodeCommit is also HIPAA eligible and supports Payment Card Industry Data Security Standard (PCI DSS) and ISO 27001 standards.

FIGURE 3-23 A CodeCommit Repository

CodeCommit supports common Git commands and, as mentioned earlier, there are no limits on file size, type, and repository size. CodeCommit is designed for collaborative software development environments. When developers make multiple file changes, CodeCommit manages the changes across multiple files. S3 buckets also support file versioning, but S3 versioning is really meant for recovery of older versions of files; it is not designed for collaborative software development environments; as a result, S3 buckets are better suited for files that are not source code.

Rule 2: Explicitly Declare and Isolate Dependencies

Any application that you have written or will write depends on some specific components, such as a database, a specific operating system version, a required utility, or a software agent that needs to be present. You should document these dependencies so you know the components and the version of each component required by the application. Applications that are being deployed should never rely on the assumed existence of required system components; instead, each dependency needs to be declared and managed by a dependency manager to ensure that only the defined dependencies will be installed with the codebase. A dependency manager uses a configuration file to determine what dependency to get, what version of the dependency to get, and what repository to get it from. If there is a specific version of system tools that the codebase always requires, perhaps the system tools could be added to the operating system that the codebase will be installed on. However, over time, software versions for every component will change. An example of a dependency manager could be Composer, which is used with PHP projects, or Maven, which can be used with Java projects. Another benefit of using a dependency manager is that the versions of your dependencies will be the same versions used in the development, testing, and production environments.
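As a rough illustration of what a dependency manager checks, the following Python sketch compares a declared manifest of pinned versions against what is actually present. The manifest format, package names, and version numbers are hypothetical; real tools such as Composer or Maven use their own file formats and also download the packages.

```python
def parse_manifest(text):
    """Parse 'name==version' lines into a dict; blank lines and # comments are skipped."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        name, _, version = line.partition("==")
        if not version:
            raise ValueError(f"dependency {name!r} is not pinned to a version")
        pins[name.strip()] = version.strip()
    return pins


def find_mismatches(declared, installed):
    """Return {name: (wanted, found)} for each declared dependency that is missing or differs."""
    return {
        name: (wanted, installed.get(name))
        for name, wanted in declared.items()
        if installed.get(name) != wanted
    }


# Hypothetical pins, for illustration only.
MANIFEST = """
webframework==2.3.1
dbdriver==1.0.4
"""

declared = parse_manifest(MANIFEST)
installed = {"webframework": "2.3.1", "dbdriver": "0.9.0"}
print(find_mismatches(declared, installed))
```

Running the same check in development, testing, and production is one simple way to confirm that all three environments resolve to identical versions.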

If there is duplication with operating system versions, the operating system and its feature set can also be controlled by AMI versions, and CodeCommit can be used to host the different versions of the application code. CloudFormation also includes a number of helper scripts that can allow you to automatically install and configure applications, packages, and operating system services that execute on EC2 Linux and Windows instances. The following are a few examples of these helper scripts:

cfn-init: This script can install packages, create files, and start operating system services.

cfn-signal: This script can be used with a wait condition to signal CloudFormation that the required resources are installed and available, so that stack creation continues only at that point.

cfn-get-metadata: This script can be used to retrieve the metadata defined for a resource in the CloudFormation template.
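To make the role of cfn-init more concrete, here is a minimal sketch of the AWS::CloudFormation::Init metadata that it reads, built as a Python dictionary and printed as JSON. The package name (httpd), file path, and file content are illustrative assumptions, not values taken from this chapter.

```python
import json

# Metadata block that would sit under an EC2 instance resource's "Metadata" key.
init_metadata = {
    "AWS::CloudFormation::Init": {
        "config": {
            # Install a package with yum; an empty list requests the latest version.
            "packages": {"yum": {"httpd": []}},
            # Create a file with explicit content, permissions, and ownership.
            "files": {
                "/var/www/html/index.html": {
                    "content": "<h1>Hello</h1>\n",
                    "mode": "000644",
                    "owner": "root",
                    "group": "root",
                }
            },
            # Make sure the service is enabled at boot and currently running.
            "services": {
                "sysvinit": {
                    "httpd": {"enabled": "true", "ensureRunning": "true"}
                }
            },
        }
    }
}

print(json.dumps(init_metadata, indent=2))
```

The three keys under config line up with what the helper script can do: install packages, create files, and start operating system services.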

Rule 3: Store Configuration in the Environment

Your codebase should be the same in the development, testing, and production environments. However, your database instances or your S3 buckets will have different paths, or URLs, in each environment. Obviously, a local database shouldn’t be stored on a compute instance operating as a web server or as an application server. Other configuration components, such as API keys and database credentials for access and authentication, should never be hard-coded. You can use AWS Secrets Manager for storing database credentials and secrets, and you can use IAM roles for accessing data resources at AWS, including S3 buckets, DynamoDB tables, and RDS databases. You can use API Gateway to host and publish your APIs.

Development frameworks define environment variables through the use of configuration files. Separating your application components from the application code allows you to reuse your backing services in different environments, using environment variables to point to the desired resource from the development, testing, or production environment. Amazon has a few services that can help centrally store application configurations:

AWS Secrets Manager: This service allows you to store application secrets such as database credentials, API keys, and OAuth tokens.

AWS Certificate Manager (ACM): This service allows you to create and manage public Secure Sockets Layer/Transport Layer Security (SSL/TLS) certificates used for any hosted AWS websites or applications. ACM also enables you to create a private certificate authority and issue X.509 certificates for identification of IAM users, EC2 instances, and AWS services.

AWS Key Management Service (KMS): This service can be used to create and manage encryption keys.

AWS Systems Manager Parameter Store: This service stores configuration data and secrets for EC2 instances, including passwords, database strings, and license codes.
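A minimal sketch of reading configuration from the environment, rather than hard-coding it, might look like the following. The variable names (DATABASE_URL, ASSETS_BUCKET, LOG_LEVEL) and the sample values are assumptions made for illustration; in practice the values could be injected from a service such as Parameter Store when the instance or container starts.

```python
import os


class ConfigError(RuntimeError):
    """Raised when a required setting is absent from the environment."""


def load_config(env=os.environ):
    """Build the application's settings from environment variables only."""
    required = ("DATABASE_URL", "ASSETS_BUCKET")  # hypothetical names
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise ConfigError("missing environment variables: " + ", ".join(missing))
    return {
        "database_url": env["DATABASE_URL"],
        "assets_bucket": env["ASSETS_BUCKET"],
        "log_level": env.get("LOG_LEVEL", "INFO"),  # optional, with a default
    }


# The same code serves every environment; only the injected values differ.
dev = load_config({
    "DATABASE_URL": "mysql://localhost:3306/app_dev",
    "ASSETS_BUCKET": "example-assets-dev",
})
print(dev["assets_bucket"], dev["log_level"])
```

Failing fast on a missing variable keeps a misconfigured deployment from starting with silent defaults.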

Rule 4: Treat Backing Services as Attached Resources

All infrastructure services at AWS can be defined as backing services, and AWS services can be accessed by HTTPS private endpoints. Backing services hosted at AWS are connected over the AWS private network and include databases (for example, Relational Database Service [RDS], DynamoDB), shared storage (for example, S3 buckets, Elastic File System [EFS]), Simple Mail Transfer Protocol (SMTP) services, queues (for example, Simple Queue Service [SQS]), caching systems (such as ElastiCache, which manages Memcached or Redis in-memory queues or databases), and monitoring services (for example, CloudWatch, Config, CloudTrail).

Under certain conditions, backing services should be completely swappable; for example, a MySQL database hosted on premises should be able to be swapped with a hosted copy of the database at AWS without requiring a change to application code; the only variable that needs to change is the resource handle in the configuration file that points to the database location.
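The idea of a swappable resource handle can be sketched as follows: the application derives its connection settings from a single URL, so moving the database means changing that one value. Both hostnames are hypothetical, and the default port of 3306 simply reflects the MySQL example in the text.

```python
from urllib.parse import urlsplit


def database_settings(resource_url):
    """Turn a resource handle such as mysql://host:port/name into connection settings."""
    parts = urlsplit(resource_url)
    return {
        "engine": parts.scheme,
        "host": parts.hostname,
        "port": parts.port or 3306,
        "name": parts.path.lstrip("/"),
    }


# Hypothetical handles: an on-premises server and a hosted copy at AWS.
on_prem = database_settings("mysql://db01.corp.example:3306/orders")
hosted = database_settings("mysql://orders.example.us-east-1.rds.amazonaws.com:3306/orders")

# Only the host differs; the application code that consumes the settings is unchanged.
print(on_prem["host"], "->", hosted["host"])
```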

Rule 5: Separate the Build and Run Stages

If you are creating applications that will be updated, whether on a defined schedule or at unpredictable times, you will want to have defined stages during which testing can be carried out on the application state before it is approved and moved to production. Amazon has several PaaS services that work with multiple stages. As discussed earlier in this chapter, Elastic Beanstalk allows you to upload and deploy your application code combined with a configuration file that builds the AWS environment and deploys your application.

The Elastic Beanstalk build stage could retrieve your application code from the defined repo storage location, which could be an S3 bucket. Developers could also use the Elastic Beanstalk CLI to push their application code commits to AWS CodeCommit. When you run the CLI command eb create or eb deploy to create or update an Elastic Beanstalk environment, the selected application version is pulled from the defined CodeCommit repository, and the application and required environment are uploaded to Elastic Beanstalk. Other AWS services that work with deployment stages include the following:

AWS CodePipeline: This service provides a continuous delivery service for automating deployment of your applications using multiple staging environments.

AWS CodeDeploy: This service helps automate application deployments to EC2 instances hosted at AWS or on premises.

AWS CodeBuild: This service compiles your source code, runs tests on prebuilt environments, and produces code ready to deploy without your having to manually build the test server environment.

Rule 6: Execute an App as One or More Stateless Processes

Stateless processes provide fault tolerance for the instances running your applications by separating the important data records being worked on by the application and storing them in a centralized storage location such as an SQS message queue. An example of a stateless design using an SQS queue could be a design in which an SQS message queue is deployed as part of the workflow to add a corporate watermark to all training videos uploaded to an associated S3 bucket (see Figure 3-24). A number of EC2 instances could be subscribed to the watermark SQS queue; every time a video is uploaded to the S3 bucket, a message is sent to the watermark SQS queue. The EC2 servers subscribed to the SQS queue poll for any updates to the queue; when an update message is received by a subscribed server, the server carries out the work of adding a watermark to the video and then stores the video in another S3 bucket.

FIGURE 3-24 Using SQS Queues to Provide Stateless Memory-Resident Storage for Applications
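The watermark workflow can be sketched in a few lines of Python. This is a local simulation only: queue.Queue stands in for the SQS queue, the watermark function is a placeholder for the real video processing, and the file names are made up. With a real SQS queue, a message that a worker fails to delete becomes visible again after its visibility timeout; the sketch imitates that by putting a failed job back on the queue.

```python
import queue


def watermark(video_key):
    """Placeholder for the real processing step; returns the output location."""
    return f"watermarked/{video_key}"


def process_next(jobs, results):
    """Take one job from the shared queue and process it.

    Returns False when the queue is empty. The worker keeps no state of its
    own, so any worker can pick up any job.
    """
    try:
        video_key = jobs.get_nowait()
    except queue.Empty:
        return False
    try:
        results.append(watermark(video_key))
    except Exception:
        jobs.put(video_key)  # hand the job back so another worker can retry it
        raise
    return True


jobs = queue.Queue()  # local stand-in for the watermark SQS queue
for key in ("intro.mp4", "safety.mp4"):
    jobs.put(key)

results = []
while process_next(jobs, results):
    pass
print(results)
```

Because the pending work lives in the queue rather than on a worker, losing a worker loses at most the job in progress, and that job is returned for another attempt.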

Other stateless options available at AWS include the following:

Amazon Simple Notification Service (SNS): This hosted messaging service allows applications to deliver push-based notifications to subscribers such as SQS queues or Lambda functions.

Amazon MQ: This managed message broker service is designed for Apache ActiveMQ, an open-source message broker, and provides functionality similar to that of SQS queues.

Amazon Simple Email Service (SES): This hosted email-sending service includes an SMTP interface that allows you to integrate the email service into your application for communicating with an end user.

Let’s look at an example of how these services can solve availability and reliability problems. Say that a new employee at your company needs to create a profile on the first day of work. The profile application runs on a local server, and each new hire needs to enter pertinent information. Each screen of information is stored within the application running on the local server until the profile creation is complete. This local application is known to fail without warning, causing problems and wasting time. You decide to move the profile application to the AWS cloud, which requires a proper redesign with hosted components to provide availability and reliability by hosting the application on multiple EC2 instances behind a load balancer in separate availability zones. Components such as an SQS queue can retain the user information in a redundant data store. Then, if one of the application servers crashes during the profile creation process, another server takes over, and the process completes successfully.

Data that needs to persist for an undefined period of time should always be stored in a redundant storage service such as a DynamoDB database table, an S3 bucket, an SQS queue, or a shared file store such as EFS. When the user profile creation is complete, the application can store the relevant records and can communicate with the end user by using Amazon Simple Email Service (SES).

Rule 7: Export Services via Port Binding

Instead of using a local web server installed on a local server host and accessible only from a local port, you should make services accessible by binding to external ports where the services are located and accessible using an external URL. For example, all web requests can be carried out by binding to an external port, where the web service is hosted and from which it is accessed. The service port that the application needs to connect to is defined by the development environment’s configuration file (see the section “Rule 3: Store Configuration in the Environment,” earlier in this chapter). The associated web service can be used multiple times by different applications and the different development, testing, and production environments.
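As a small sketch of port binding, the following self-contained service uses only the Python standard library and takes its port from the environment. The PORT variable name, the default of 8080, and the plain-text response are assumptions for illustration.

```python
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet; a real service would emit a log event


def make_server(env=os.environ):
    """Bind to all interfaces on the port named by the environment."""
    port = int(env.get("PORT", "8080"))
    return ThreadingHTTPServer(("0.0.0.0", port), HealthHandler)
```

Calling make_server().serve_forever() starts the service. Because the service exports HTTP by binding a port itself, a load balancer or another application can reach it through a URL without a separately installed web server.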

Rule 8: Scale Out via the Process Model

If your application can’t scale horizontally, it’s not designed for dynamic cloud operation. Many AWS services, including these, are designed to automatically scale horizontally:

EC2 instances: Instances can be scaled with EC2 Auto Scaling and CloudWatch metric alarms.

Load balancers: The ELB load balancer infrastructure horizontally scales to handle demand.

S3 storage: The S3 storage array infrastructure horizontally scales in the background to handle reads and writes.

DynamoDB: DynamoDB horizontally scales tables within an AWS region. Tables can also be designed as global tables, which can scale across multiple AWS regions.

Rule 9: Maximize Robustness with Fast Startup and Graceful Shutdown

User session information can be stored in Amazon ElastiCache or in in-memory queues, and application state can be stored in SQS message queues. Application configuration and bindings, source code, and backing services are hosted by AWS managed services, each with its own levels of redundancy and durability. Data is stored in a persistent backing storage location such as S3 buckets, RDS, or DynamoDB databases (and possibly EFS or FSx shared storage). Applications with no local dependencies and integrated hosted redundant services can be managed and controlled by a number of AWS management services.

A web application hosted on an EC2 instance can be ordered to stop listening through Auto Scaling or ELB health checks.

Load balancer failures are redirected using Route 53 alias records to another load balancer, which is assigned the appropriate elastic IP (EIP) address.

The RDS relational database primary instance automatically fails over to the standby database instance. The primary database instance is automatically rebuilt.

DynamoDB tables are automatically replicated across multiple availability zones within each AWS region.

Spot EC2 instances can automatically hibernate when resources are taken back.

Compute failures in stateless environments return the current job to the SQS work queue.

Tagged resources can be monitored by CloudWatch alarms, with Lambda functions used to shut down resources.
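On the application side, graceful shutdown usually means finishing the current job and handing back the rest when a stop request arrives. The following sketch shows one way to do that; the class and function names are hypothetical, and the handle callable is a stand-in for real work.

```python
import signal


class GracefulWorker:
    """Processes jobs until asked to stop, then reports what is left over."""

    def __init__(self):
        self.stopping = False
        self.completed = []

    def request_stop(self, signum=None, frame=None):
        # The (signum, frame) signature matches what signal.signal() expects.
        self.stopping = True

    def run(self, jobs, handle):
        remaining = list(jobs)
        while remaining and not self.stopping:
            job = remaining.pop(0)
            handle(job)  # the job in progress is always allowed to finish
            self.completed.append(job)
        return remaining  # unfinished jobs to return to the work queue


def install_handlers(worker):
    """Stop cleanly on SIGTERM (the usual stop request on Linux) and on Ctrl+C."""
    signal.signal(signal.SIGTERM, worker.request_stop)
    signal.signal(signal.SIGINT, worker.request_stop)


# Simulate a stop request that arrives while job "b" is being processed.
worker = GracefulWorker()
leftover = worker.run(
    ["a", "b", "c"],
    handle=lambda job: worker.request_stop() if job == "b" else None,
)
print(worker.completed, leftover)
```

In a real process, install_handlers(worker) would be called once at startup, and the leftover jobs would be returned to the shared queue for another instance to pick up.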

Rule 10: Keep Development, Staging, and Production as Similar as Possible

With this rule, similar does not refer to the number of instances or the size of database instances and supporting infrastructure. Your development environment must use exactly the same codebase as production but can differ in the number of instances or database servers being used. Aside from the infrastructure components, everything else in the codebase must remain the same. CloudFormation can be used to automatically build each environment using a single template file with conditions that define what infrastructure resources to build for each development, testing, and production environment.
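A single template with conditions might be sketched as follows, again built as a Python dictionary and printed as JSON. The parameter names, instance types, and the production-only SNS topic are illustrative assumptions; the Conditions section, Fn::Equals, Fn::If, and the resource-level Condition key are standard CloudFormation template features.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "EnvType": {
            "Type": "String",
            "AllowedValues": ["dev", "test", "prod"],
            "Default": "dev",
        },
        "AmiId": {"Type": "AWS::EC2::Image::Id"},
    },
    "Conditions": {
        # True only when the stack is launched with EnvType=prod.
        "IsProd": {"Fn::Equals": [{"Ref": "EnvType"}, "prod"]},
    },
    "Resources": {
        "AppInstance": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                # Same AMI, and therefore the same codebase, in every environment.
                "ImageId": {"Ref": "AmiId"},
                # Only the size of the instance varies by environment.
                "InstanceType": {"Fn::If": ["IsProd", "m5.large", "t3.micro"]},
            },
        },
        # Created only in production.
        "OpsAlertTopic": {"Type": "AWS::SNS::Topic", "Condition": "IsProd"},
    },
}

print(json.dumps(template, indent=2))
```

Launching the same template three times with different EnvType values yields environments that differ in scale but not in what they run.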

Rule 11: Treat Logs as Event Streams

In development, testing, and production environments, the log stream of each running process must be stored externally. At AWS, logging is designed as event streams. CloudWatch Logs or S3 buckets can be used to store EC2 instances’ operating system and application logs. CloudTrail logs, which track API calls made in the AWS account, can also be streamed to CloudWatch Logs for further analysis. Third-party monitoring solutions support AWS and can interface with S3 bucket storage. Most logs and reports generated at AWS by EC2 instances or AWS managed services can ultimately be archived in an S3 bucket.
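From the application’s point of view, treating logs as an event stream means writing one structured event per line to standard output and leaving collection and storage to the platform. A minimal sketch using Python’s standard logging module follows; the logger name and the fields included in each event are assumptions.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name, stream=sys.stdout):
    """Create a logger that writes JSON events to a stream, never to a local file."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output through the root logger
    return logger


log = build_logger("profile-app")  # hypothetical application name
log.info("profile created for user %s", "u-123")
```

An agent on the instance, or the container platform, can then forward the stream to a destination such as CloudWatch Logs without any change to the application.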

Rule 12: Run Admin/Management Tasks as One-Off Processes

Administrative processes should be executed using the same method, regardless of the environment in which the administrative task is executed. For example, an application might require a manual process to be carried out; the steps to carry out the manual process must remain the same, whether they are executed in the development, testing, or production environment.

The goal in presenting the rules of the Twelve-Factor App Methodology is to help you think about your applications and infrastructure and, over time, implement as many of the rules as possible. This might be an incredibly hard task to do for applications that are simply lifted and shifted to the cloud. Newer applications that are completely developed in the cloud should attempt to follow these rules as closely as possible.